The Foundational Law of AI Efficiency

We engineer the architectural resilience required to sustain the next era of intelligence, transforming infrastructure from a static constraint into a fluid utility.

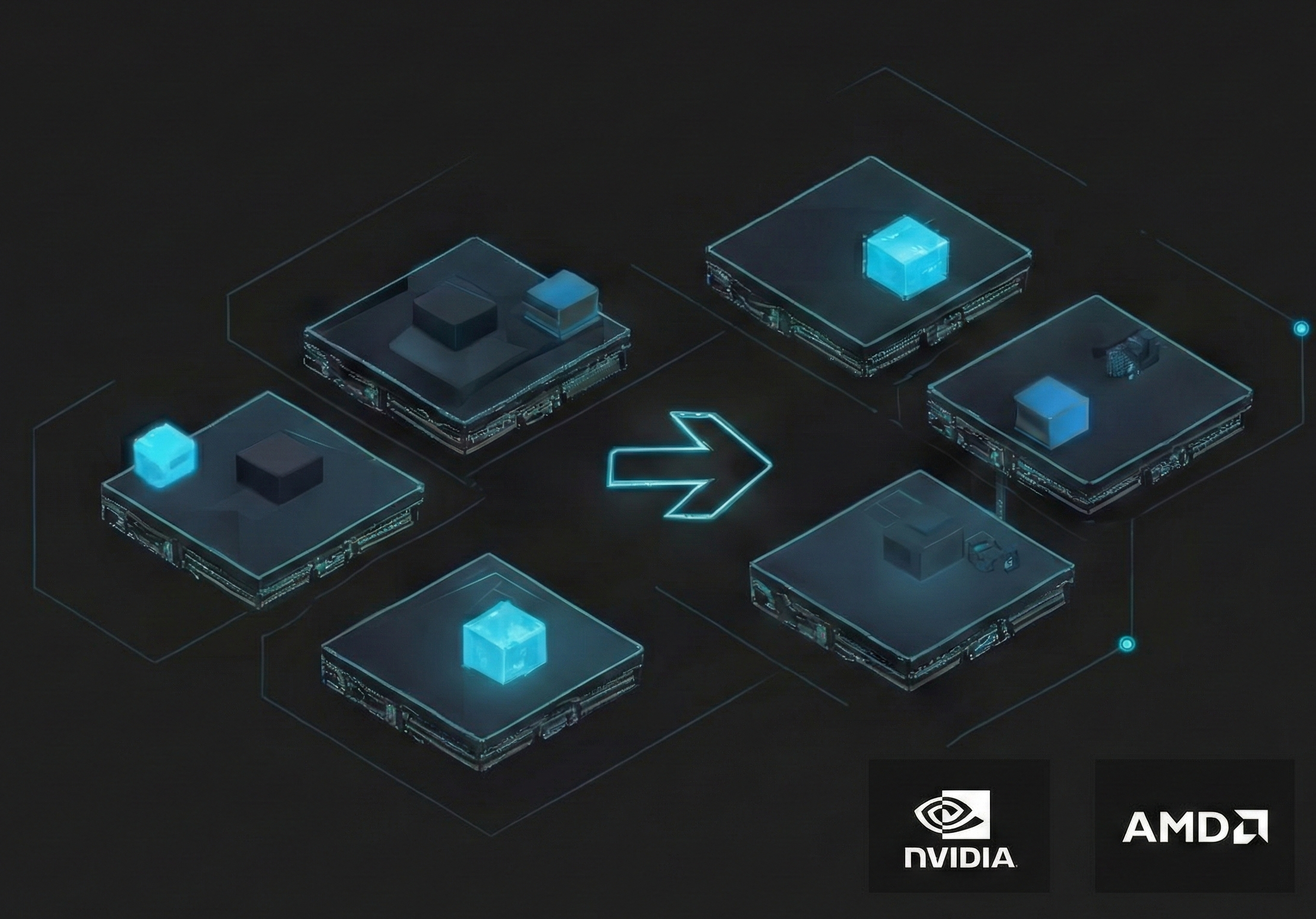

GPU Efficiency & Virtualization

Optimize and scale AI infrastructure by pooling expensive compute resources. We eliminate idle silicon to maximize cluster utilization across both NVIDIA and AMD GPU ecosystems.

Intelligent Scheduling

Our orchestration platform uses advanced scheduling and dynamic quotas to move workloads seamlessly from model development to inference.

Autonomous Resource Liquidity

Dynamic bin-packing and priority-based pre-emption ensure that production workloads are never starved by research jobs, keeping hardware available at peak demand.
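The platform's scheduler internals are not described here, but the interplay of bin-packing and pre-emption can be illustrated with a minimal Python sketch. Everything in it is a hypothetical assumption: the `Job`/`Node` types, the integer priority scale, the best-fit heuristic, and the rule that a job may only evict strictly lower-priority work. It is not the platform's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    name: str
    gpus: int
    priority: int  # hypothetical scale: higher = more important (production > research)


@dataclass
class Node:
    name: str
    capacity: int  # total GPUs on the node
    jobs: list = field(default_factory=list)

    def free(self) -> int:
        return self.capacity - sum(j.gpus for j in self.jobs)


def schedule(nodes: list[Node], incoming: list[Job]) -> list[Job]:
    """Place incoming jobs; return jobs that were pre-empted or could not be placed."""
    displaced: list[Job] = []
    # Highest priority first, then largest first (best-fit decreasing).
    for job in sorted(incoming, key=lambda j: (-j.priority, -j.gpus)):
        # Best fit: the node whose free capacity is tightest but still sufficient.
        fits = [n for n in nodes if n.free() >= job.gpus]
        if fits:
            min(fits, key=lambda n: n.free()).jobs.append(job)
            continue
        # No room anywhere: find the node where evicting strictly lower-priority
        # jobs (lowest priority first) frees enough GPUs at the smallest cost.
        best = None  # (evicted_gpus, node, victims)
        for node in nodes:
            freed, victims = node.free(), []
            for j in sorted((j for j in node.jobs if j.priority < job.priority),
                            key=lambda j: j.priority):
                if freed >= job.gpus:
                    break
                victims.append(j)
                freed += j.gpus
            if freed >= job.gpus:
                cost = sum(v.gpus for v in victims)
                if best is None or cost < best[0]:
                    best = (cost, node, victims)
        if best is None:
            displaced.append(job)  # cannot run now; leave it queued
            continue
        _, node, victims = best
        for v in victims:
            node.jobs.remove(v)
        node.jobs.append(job)
        displaced.extend(victims)  # pre-empted jobs go back to the queue
    return displaced


if __name__ == "__main__":
    nodes = [
        Node("node-a", 8, [Job("research-1", 6, priority=1)]),
        Node("node-b", 8, [Job("research-2", 4, priority=1)]),
    ]
    evicted = schedule(nodes, [Job("prod-inference", 6, priority=10)])
    print([j.name for j in evicted])  # ['research-2']
```

Here the production job fits nowhere, so the sketch evicts the research job that frees enough GPUs at the lowest cost (4 GPUs on `node-b` rather than 6 on `node-a`). Choosing the cheapest eviction keeps as much research work running as possible while still guaranteeing production capacity.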

Agentic Observability

A highly available and horizontally scalable ecosystem engineered to monitor millions of machines. We transform raw telemetry into a unified reasoning loop.

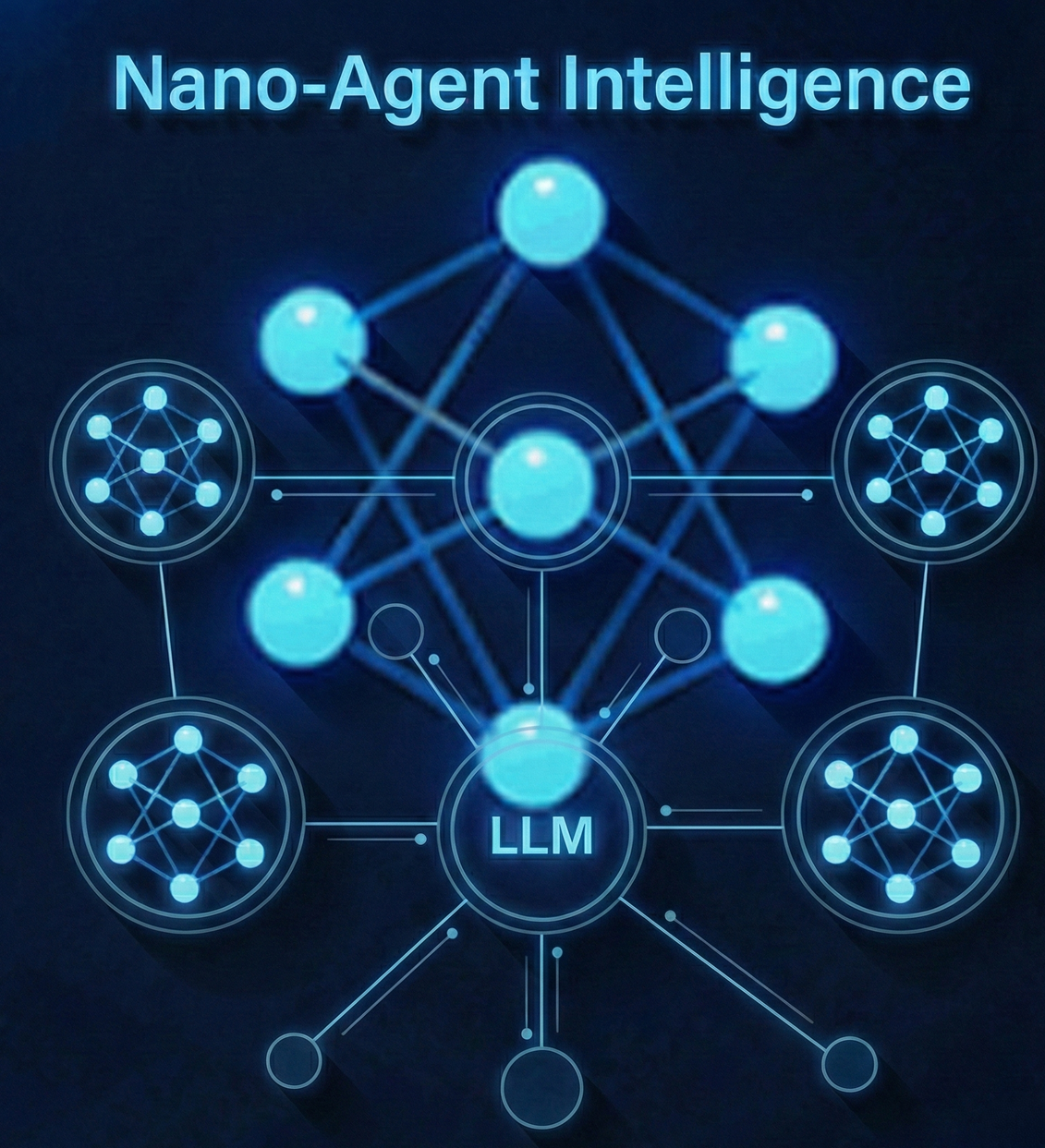

Nano-Agent Intelligence

Nano-Agents correlate logs, metrics, and traces to drive proactive investigation. Using Bloom filters and caching, our Modular Multi-Agent System enables self-remediation at massive scale.
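The copy names Bloom filters without saying how they are used. One common pattern is a cheap pre-check in front of a slower cache or store: a negative answer is definitive, so the lookup can be skipped. The minimal Python sketch below shows a standard Bloom filter under that assumption. The alert-fingerprint strings and the dedup loop are hypothetical illustrations, not the platform's actual design.

```python
import hashlib
import math


class BloomFilter:
    """Probabilistic set membership: no false negatives, tunable false-positive rate."""

    def __init__(self, expected_items: int, fp_rate: float = 0.01):
        if expected_items <= 0 or not 0.0 < fp_rate < 1.0:
            raise ValueError("expected_items must be > 0 and 0 < fp_rate < 1")
        # Standard sizing: m = -n ln(p) / (ln 2)^2 bits, k = (m / n) ln 2 hash functions.
        self.size = math.ceil(-expected_items * math.log(fp_rate) / math.log(2) ** 2)
        self.num_hashes = max(1, round(self.size / expected_items * math.log(2)))
        self.bits = bytearray((self.size + 7) // 8)

    def _positions(self, item: str) -> list[int]:
        # Double hashing (Kirsch-Mitzenmacher): derive k bit indexes from two
        # 64-bit slices of a single SHA-256 digest.
        digest = hashlib.sha256(item.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # odd step, never zero
        return [(h1 + i * h2) % self.size for i in range(self.num_hashes)]

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item: str) -> bool:
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item))


if __name__ == "__main__":
    # Hypothetical use: skip the exact-cache lookup for alerts that are definitely new.
    seen = BloomFilter(expected_items=100_000, fp_rate=0.001)
    for fingerprint in ["disk-full:node-17", "oom-kill:node-42", "disk-full:node-17"]:
        if fingerprint in seen:
            print("possibly seen, check the exact cache:", fingerprint)
        else:
            seen.add(fingerprint)
            print("definitely new, investigate:", fingerprint)
```

The filter costs roughly 9.6 bits per item at a 1% false-positive rate, which is why it suits high-volume telemetry. A "possibly seen" answer still has to be confirmed against an exact cache.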

Orchestration Protocol

Governed by the Agent2Agent (A2A) Protocol, these agents communicate to resolve complex multi-domain failures across global enterprise clusters.

Edge Intelligence & MCP

A secure interface built on the Model Context Protocol (MCP) extracts high-quality context from distributed silos, pushing the reasoning loop to the edge for low-latency remediation.
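MCP messages are JSON-RPC 2.0, and context is typically pulled from a server with a `resources/read` request that names a resource URI. The minimal Python sketch below only serializes such a request. The `logs://` URI is a hypothetical resource an edge-side server might expose, and the initialization handshake, transport, and authentication are all omitted.

```python
import itertools
import json

# Monotonic JSON-RPC request ids for this (hypothetical) client session.
_request_ids = itertools.count(1)


def mcp_read_resource(uri: str) -> str:
    """Serialize an MCP `resources/read` request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "resources/read",
        "params": {"uri": uri},
    }
    return json.dumps(request)


if __name__ == "__main__":
    # Hypothetical custom URI exposed by an edge-side MCP server.
    print(mcp_read_resource("logs://cluster-eu-1/node-42/syslog"))
```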

High Availability

Designed for mission-critical reliability, the platform remains resilient across millions of nodes, delivering near-real-time observability even in demanding environments.